Image credit: Frontiers Science News

OPTIONAL THEME:

KNOWLEDGE AND TECHNOLOGY

ARTIFICIAL INTELLIGENCE

AI is already transforming human knowing. It harbors opportunities for quality of life and cognitive enhancement, as well as existential dangers.

This unit builds on You are the product and Chess algorithm—AlphaZero. The generative questions in these units touched upon:

—Our ubiquitous digital devices viewed as cognitive extensions

—Social media algorithms modifying our attentional behavior for profit and/or political influence

—Societal implications for AI replacing human workers on a large scale

ACKNOWLEDGEMENT AND GRATITUDE

The content and scope of this unit were chiefly inspired by the work of Stuart Russell, Professor of Computer Science at the University of California, Berkeley, and Adjunct Professor of Neurological Surgery at the University of California, San Francisco. He is the co-author of Artificial Intelligence: A Modern Approach, the standard college textbook in the field. Stuart Russell’s recent paperback Human Compatible: Artificial Intelligence and the Problem of Control is a terrific read and also strongly influenced this TOK teaching unit.

CLASS ACTIVITY I:

THE TURING TEST AND THE CHINESE ROOM

Let’s begin with an encounter with two classic AI thought experiments. Students may be familiar with Alan Turing via the film The Imitation Game (2014) and the placement of his portrait on the Bank of England £50 note.

Next is a BBC recreation of philosopher John Searle’s Chinese Room thought experiment.

Finally—before students individually tackle the four generative tasks, and respond to the six generative questions—introduce them to Cassie from Agility Robotics.

FOUR GENERATIVE TASKS…

1. Describe the Turing Test in a maximum of 50 words.

2. Complete the following task after first using Google Translate to translate it from Samoan into English:

Faʻamatala le Potu Saina i se Tweet

3. Using only mental arithmetic, calculate how many hours there are in a week.

4. Now use pen and paper to organize your thoughts and calculate how many picoseconds* there are in a leap year. Write down your answer, so you don’t forget it. Next, use the electronic calculator app on your phone or laptop to determine the accuracy of your response.

*A picosecond is equal to 1⁄1 000 000 000 000 of a second.
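For teachers who want to check the arithmetic behind Tasks 3 and 4, the calculation can be sketched in a few lines of Python. This is only an illustrative check: the constant and function names are hypothetical, and it assumes a leap year of exactly 366 days of 24 hours each, which is itself an assumption worth raising with students.

```python
# Illustrative check for Tasks 3 and 4 (all names here are hypothetical).
HOURS_PER_DAY = 24
SECONDS_PER_HOUR = 60 * 60
PICOSECONDS_PER_SECOND = 10**12  # 1 ps = 1/1 000 000 000 000 of a second


def hours_in_week() -> int:
    """Task 3: seven days of 24 hours."""
    return 7 * HOURS_PER_DAY


def picoseconds_in_leap_year(days: int = 366) -> int:
    """Task 4: assumes every day is exactly 24 hours (no leap seconds)."""
    return days * HOURS_PER_DAY * SECONDS_PER_HOUR * PICOSECONDS_PER_SECOND


print(hours_in_week())                     # 168
print(f"{picoseconds_in_leap_year():,}")   # 31,622,400,000,000,000,000
```

Python integers have arbitrary precision, so the result is exact. A phone calculator may instead display a rounded value in scientific notation, roughly 3.16 × 10^19, which is a useful talking point when students compare answers.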

…AND SIX GENERATIVE QUESTIONS

5. To what extent does your calculator understand math?

6. To what extent does Google Translate speak Chinese?

7. To what extent does Cassie know how to walk?

8. Closely reread the instructions for Task #4—what underlying assumptions were being made?

9. Is the Chinese Room a subset of the Turing Test?

10. Quickly research online the difference between “syntax” and “semantics.” How does this demarcation play into the Chinese Room thought experiment?
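For Question 10, a toy program can make the syntax/semantics distinction concrete. The sketch below is not Searle's own formulation; it assumes a hypothetical two-entry rulebook and simply matches the shapes of incoming symbols to canned replies, with no representation of meaning anywhere in the code.

```python
# Toy "Chinese Room": RULEBOOK is a hypothetical lookup table.
# The program matches symbol shapes (syntax) and never consults
# what the symbols mean (semantics).
RULEBOOK = {
    "你好": "你好！",                      # "Hello" -> "Hello!"
    "你会说中文吗？": "会，我会说中文。",    # "Do you speak Chinese?" -> "Yes, I speak Chinese."
}
FALLBACK = "请再说一遍。"                  # "Please say that again."


def room_reply(symbols: str) -> str:
    """Return the reply the rulebook pairs with the input symbols."""
    return RULEBOOK.get(symbols, FALLBACK)


print(room_reply("你会说中文吗？"))
```

The reply claims fluency in Chinese, yet the function would behave identically if every string were replaced by arbitrary squiggles. Students can debate whether scaling the rulebook up would ever change that.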

The Bank of England issued its new £50 banknote for the first time on 23 June 2021, which coincided with Alan Turing’s birthday.

CLASS ACTIVITY II:

CAN A MACHINE KNOW?

This activity builds on the previous one. If they happen to fall on different days, briefly revisit what emerged from the encounter with the Turing Test, the Chinese Room and the four generative tasks.

Arrange the class into two round table discussion groups. Each large group will appoint a facilitator, a scribe, and a trio of students who will report back to the whole class. Then unleash a full 8 minutes of large group exploration of the following fundamental Knowledge Question:

In what sense, if any, can we assert that a machine can know something?

Provide a three-minute warning, but discombobulate students by cutting the conversations a little sooner. Choose your moment. Break the fourth wall and tell them that you are doing this purposefully to create tension and momentum for the whole class discussion.

Before asking the trios from each group to report back, encourage students to instantiate their claims with cogent examples and remind them of the power of engaging with any emergent counterclaims. In short: encourage a lively, thoughtful to-and-fro.

Here is a collection of challenging additional knowledge questions that may or may not be necessary to help students focus their emergent thinking.

In what ways, if any, have technological advances allowed knowledge to reside outside of human knowers?

What demarcates knowledge from mere information processing?

Is carbon-based embodiment essential for knowing and knowledge?

It is highly probable that students will surface engaging hot topics like sentience in animals, aliens from distant planets with advanced technologies, and robots with human characteristics, as they explore the knowledge questions. Expect them also to reference examples from sci-fi movies and Netflix series featuring AI.

Some of the existential risks of AI, including the “singularity” concept, will likely emerge. We will delve more deeply into these ideas next.

Face recognition graphic

Photo: Getty Images

CLASS ACTIVITY III:

ARTIFICIAL GENERAL INTELLIGENCE (AGI)

The most eminent neuroscientists and philosophers do not agree on the nature of consciousness. As sentient beings, we are each confined to the ultimately incommunicable bubble of our own subjectivity, and this inescapable fact compounds the difficulty of addressing whether or not machines will eventually become self-aware.

Whilst the thorniness of the AI consciousness issue provides intriguing perspectives on what we can and cannot know, the various speculative arguments tend to be beyond the scope of 100 hours of TOK!

Artificial general intelligence (AGI) is still hypothetical. It envisages intelligent machines that could understand or learn any intellectual task that a human being can. The AGI concept in current usage does not require the complication of consciousness.

Weak (or narrow) AI is where our technologies stand at the moment. Weak AI includes applications like Siri and Alexa, Google Translate, and advanced specialized programs that learn rapidly like AlphaZero, ChatGPT-5 and DeepSeek.

A SELECTION OF ICONIC AI QUOTES

Next, perform a class reading of the following historical quotations. Each has contributed to speculative thinking about a future AGI “singularity” and its associated existential risk. Start by having the students read all of them in silence. Then ask four volunteers to each read one quote aloud, including its attribution. Solicit class reactions after each quote in turn. Quotes #2 and #3 should provoke animated responses.

#1

If a machine can think it might think more intelligently than we do, and then where should we be? Even if we could keep the machines in a subservient position, for instance by turning off the power at strategic moments, we should as a species feel greatly humbled.

—Turing, Alan (May 15, 1951) Can Digital Computers Think? Broadcast Talk. BBC Third Programme, UK.

#2

The ever accelerating progress of technology ...gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.

—Ulam, Stanislaw. (May 1958) Tribute to John von Neumann. Bulletin of the American Mathematical Society, 64 (3, part 2):1–49.

Perils of the Paperclip Maximizer

Image credit: Bart van der Mark

#3

It also seems perfectly possible to have a superintelligence whose sole goal is something completely arbitrary, such as to manufacture as many paperclips as possible, and who would resist with all its might any attempt to alter this goal ...with the consequence that it starts transforming first all of earth and then increasing portions of space into paperclip manufacturing facilities.

—Nick Bostrom (2003) Cognitive, Emotive and Ethical Aspects of Decision Making in Humans and in Artificial Intelligence. Vol. 2, ed. I. Smit et al., Int. Institute of Advanced Studies in Systems Research and Cybernetics, 2003, pp. 12-17.

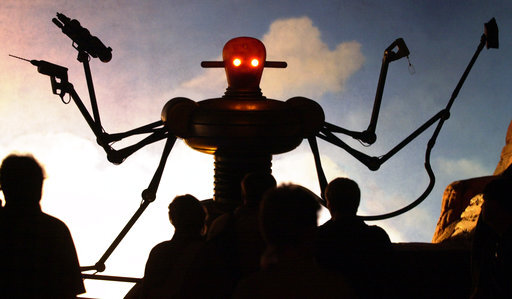

Visitors to the Planet of Visions robot display at the Hanover Expo 2000 Image: AP Photo/Fabian Bimmer

#4

Let’s start with the three fundamental rules of robotics – the three rules that are built most deeply into a robot’s positronic brain… One, a robot may not injure a human being under any conditions—and as a corollary, must not permit a human being to be injured because of inaction on his part... Two, a robot must follow all orders given by qualified human beings as long as they do not conflict with Rule 1. Three: a robot must protect his own existence as long as that does not conflict with Rules 1 and 2.

—Asimov, Isaac (1942) Runaround in Astounding Science Fiction March 1942: 100 Street and Smith Comic magazine, New York.

CLASS ACTIVITY IV:

AUTONOMOUS SWARMING SLAUGHTERBOTS—

BE VERY AFRAID!

1. Without providing any introductory commentary, show this dystopian Slaughterbots video created for the Stop Autonomous Weapons initiative. Pause the video at various moments during the faux TED Talk section, asking the universal generative question:

What is going on here?

2. Ask students to visit the autonomousweapons.org homepage and respond to the website’s generative question:

“What can be done?”

3. For decades Professor Stuart Russell has been a leading contributor in the field of Artificial Intelligence. He appears in person at the very end of the Slaughterbots video.

Ask a student to read aloud Professor Russell’s quotation below from his 2019 book. Then ask students to read it for themselves in silence. Finally, unpack the text line by line, keeping the following generative question to the fore:

According to Stuart Russell, what are some of the implications of misaligning human objectives with the objectives of superintelligent, autonomous machines?

“Midas, a legendary king in ancient Greek mythology, got exactly what he asked for—namely, that everything he touched turned to gold. Too late, he discovered that this included his food, his drink, and his family members, and he died in misery and starvation. The same theme is ubiquitous in human mythology… Goethe’s tale of the sorcerer’s apprentice, who instructs a broom to fetch water—but doesn’t say how much water and doesn’t know how to make the broom stop.

A technical way of saying this is that we may suffer from a failure of value alignment—we may, perhaps inadvertently, imbue machines with objectives that are imperfectly aligned with our own. Until recently we were shielded from the potentially catastrophic consequences by the limited capabilities of machines and the limited scope that they have to affect the world.”
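The sorcerer's apprentice example in the quotation can be turned into a toy program for students who think more easily in code. This is a loose illustration rather than anything taken from Russell's book: the tank capacity, trip count and function names are all hypothetical. It contrasts a broom that follows the stated instruction literally with one that follows what the apprentice actually wanted.

```python
# Toy illustration of a misspecified objective.
# All names and numbers are hypothetical.
TANK_CAPACITY = 5  # buckets the tank holds before the workshop floods


def literal_broom(max_trips: int) -> int:
    """Follows the stated instruction 'fetch water' with no stopping rule."""
    buckets = 0
    for _ in range(max_trips):
        buckets += 1  # every trip satisfies the instruction as given
    return buckets


def intended_broom(max_trips: int) -> int:
    """Follows the unstated intention: fill the tank, then stop."""
    buckets = 0
    for _ in range(max_trips):
        if buckets >= TANK_CAPACITY:
            break
        buckets += 1
    return buckets


def overflow(buckets: int) -> int:
    """Buckets of water that end up on the floor."""
    return max(0, buckets - TANK_CAPACITY)


print(overflow(literal_broom(20)))   # 15
print(overflow(intended_broom(20)))  # 0
```

Both brooms obey their instructions perfectly. The flood comes entirely from the gap between what was said and what was meant, which is the point of the Midas and Goethe stories.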